Midjourney Multi-Prompt Secrets, Google’s News-Writing AI, & More

Welcome to Now AI, your weekly breakdown of the most compelling developments in artificial intelligence and your go-to guide for expert tips and tricks on how to best use the AI tools that have taken the world by storm. This week, we spoke to digital artist ClownVamp about ways to unlock your prompting power in Midjourney, explored the fantastical art and universe of AI-collaborative artist Jenni Pasanen, and looked at the implications of serious media institutions using generative AI content tools.

We also touch on the dangers of using AI tools in law enforcement, how brain-to-music models are going to become a reality sooner than you might think, and why AI detection programs just don’t work. Let’s dive in.

In the Know: The Week’s Top AI Stories

Google’s News-Writing AI Tool Causes a Stir

Artificial intelligence’s ability to create convincing images isn’t the only thing worrying people about AI’s effect on the information ecosystem these days. AI-generated images of Trump getting arrested certainly have a curious, attention-grabbing appeal, but an equally compelling and thorny development is media organizations using AI programs to help write editorial articles.

The idea divides both publishers and audiences. Some news sites have sworn off using the tools entirely; others have begun to approach them cautiously. Hell, even here at nft now, we utilize ChatGPT to help us pen news stories, and we are well aware of the potential pitfalls of doing so.

But it seems that Google has taken that idea and turned it up to 11. This past week, the tech giant approached major media organizations, including The New York Times and The Wall Street Journal, with a product known internally as Google Genesis. Billed as a helpmate to journalists, Genesis can reportedly take in details of current events and generate news stories from them.

Several news executives who saw the pitch reportedly found it unsettling, though Google insists the product is part of an initiative to provide AI-based tools that help journalists rather than hinder or replace them.

Without seeing the tool in action, it’s difficult to assess its accuracy or potential use cases. News-style outputs from ChatGPT are prone to inaccuracies (so-called hallucinations), and more often than not, they read like a first-year college student doing their best impersonation of a newsroom.

If Google and the media organizations planning to use these tools can work around these issues while staying committed to transparency and accountability (a big ask), the tools could free up journalists to spend their time on more “human” tasks. Still, the recent news that National Geographic laid off its entire team of staff writers, while not explicitly tied to AI’s rise, has given the publishing industry serious cause for concern.

Police Use AI to Catch Drug Trafficker, Transit Fare Dodgers

The fun part of AI’s rapid development is watching new tools get used in ways that remind you of your favorite sci-fi horror stories. Okay, we’re reaching a bit, but it’s no small thing that, as was revealed last week, police in New York State used AI to analyze a suspected drug trafficker’s vehicle movements, leading to an arrest in 2022. To do so, the Westchester County Police Department searched a database of nearly two billion license plate records collected over the two years before the arrest.

In a similar development, it recently came to light that New York’s Metropolitan Transportation Authority has quietly been using artificial intelligence to catch fare dodgers, with the software currently active in seven undisclosed subway stations in the city. Plans are in the works to expand it to dozens more this year.

If the thought of the authorities tracking your movements with AI unsettles you, you’re not alone. “Dragnet surveillance,” a term for the indiscriminate monitoring of a broad population rather than specific suspects (as in the case of the New York State drug trafficker), comes with a slew of ethical (and, in the U.S., constitutional) questions that nobody has complete answers to yet. As many have observed in recent years, without proper oversight, the tools, rather than the people using them, take on a certain authority, especially when their predictions go unquestioned.

As concerns about these tools’ usage rise, expect to hear the age-old argument and classic misdirection trotted out by organizations, both criminal and civil, that if you have nothing to hide, you have nothing to worry about.

AI Detection Tools Suck, Biden Forces AI Guardrails, Brain-to-Music Is Now a Thing

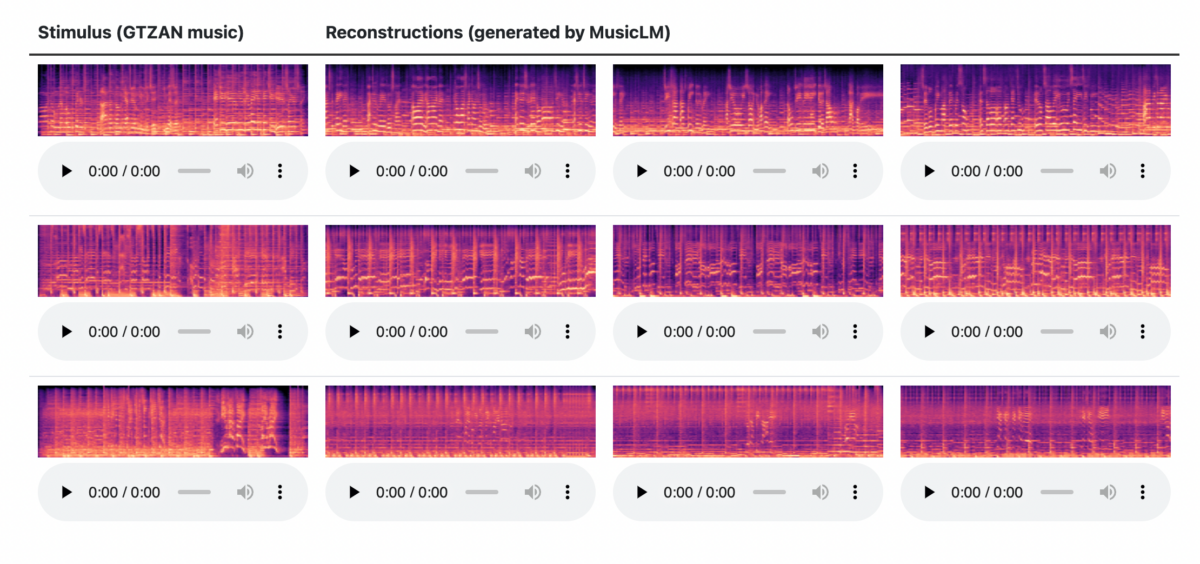

Rounding out the week’s stories, we were pumped to see Google researchers release a paper describing their “Brain2Music” model, which reconstructs music from fMRI readings. This follows a recent study that proposed MinD-Video, a similar AI approach that reconstructs video from brain readings using an augmented Stable Diffusion model. Brain-to-X functionality may sound like a distant possibility, but its foundations are being laid now.

And for those of you hoping that the swell of AI-generated content could be kept in check by AI detection tools, we have bad news for you — they’re kind of terrible at their job. So terrible, in fact, that OpenAI recently shuttered its own detection tool, AI Classifier.

The tool launched in January, and in an addendum to the original announcement post, the company wrote that “the AI classifier is no longer available due to its low rate of accuracy. We are working to incorporate feedback and are currently researching more effective provenance techniques for text, and have made a commitment to develop and deploy mechanisms that enable users to understand if audio or visual content is AI-generated.” Sorry, teachers: your next-level “plagiarism” detector will have to wait.

And in a surprising (if perhaps cosmetic) display of commitment to oversight and transparency in the AI world, President Biden got Google, Amazon, Meta, OpenAI, and three other companies to agree to voluntary safeguards in the race to develop the technology. The companies made their commitments, which include pledges on transparency, fighting bias, and “watermarking” AI-generated content, during a meeting at the White House on July 21.

/Promptly: Midjourney Multi-Prompts With ClownVamp

If you’ve heard of AI image generators, you’ve heard of Midjourney. Since its debut in July 2022, the text-to-image model has become one of the most popular programs for AI-collaborative creation. With well over 13 million users in its Discord server (about a million of whom are online at any given time) and new updates rolling out regularly, Midjourney is unlikely to lose steam anytime soon.

But despite the popular misconception that programs like Midjourney only require you to lazily type a few words to get an incredible result, prompt craft (the art of refining the input a user gives to an AI model) is no easy task. This is compounded by the fact that every new update brings even more tools to incorporate into your prompts to get the image you want.

For this week’s /Promptly, we spoke to the inimitable AI-collaborative artist ClownVamp about some of the most overlooked and powerful prompting techniques Midjourney users should know to up their creative game.

Discovering the Dial Prompt

ClownVamp: One of my favorite prompting techniques is the dial prompt. This is where you break your prompt into many small concepts, each with its own custom weighting, an approach also known as Multi Prompts. Let’s start with an example.

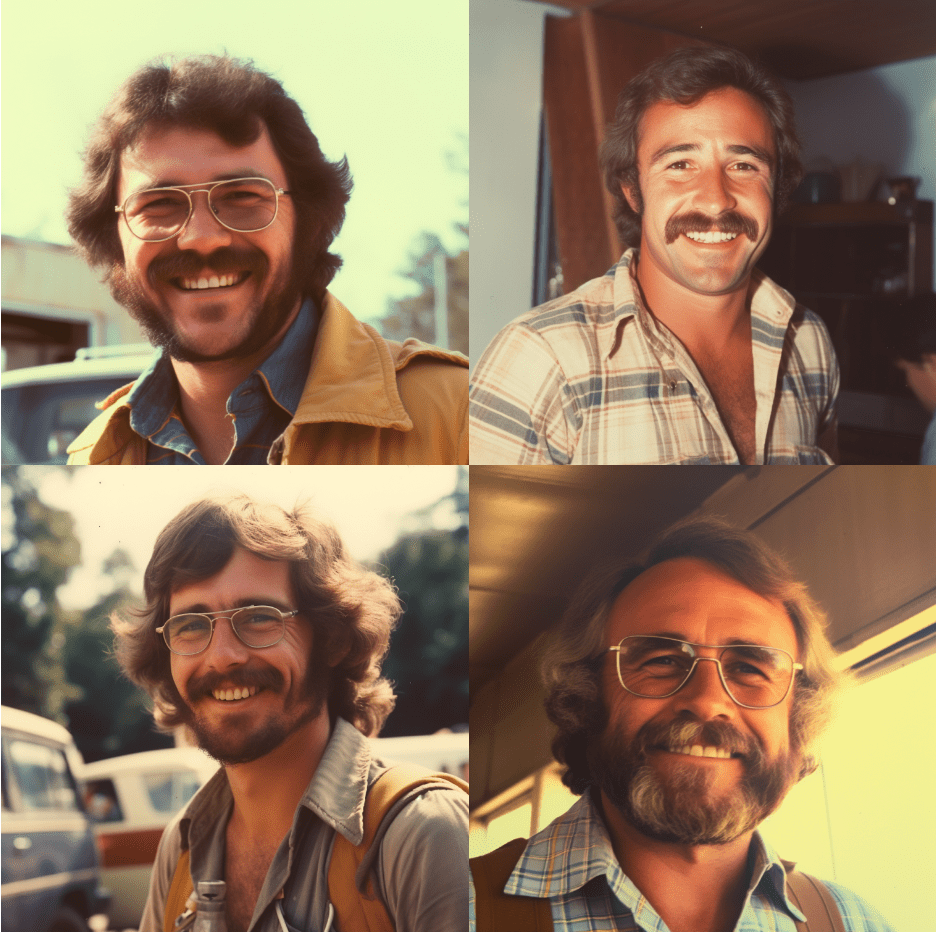

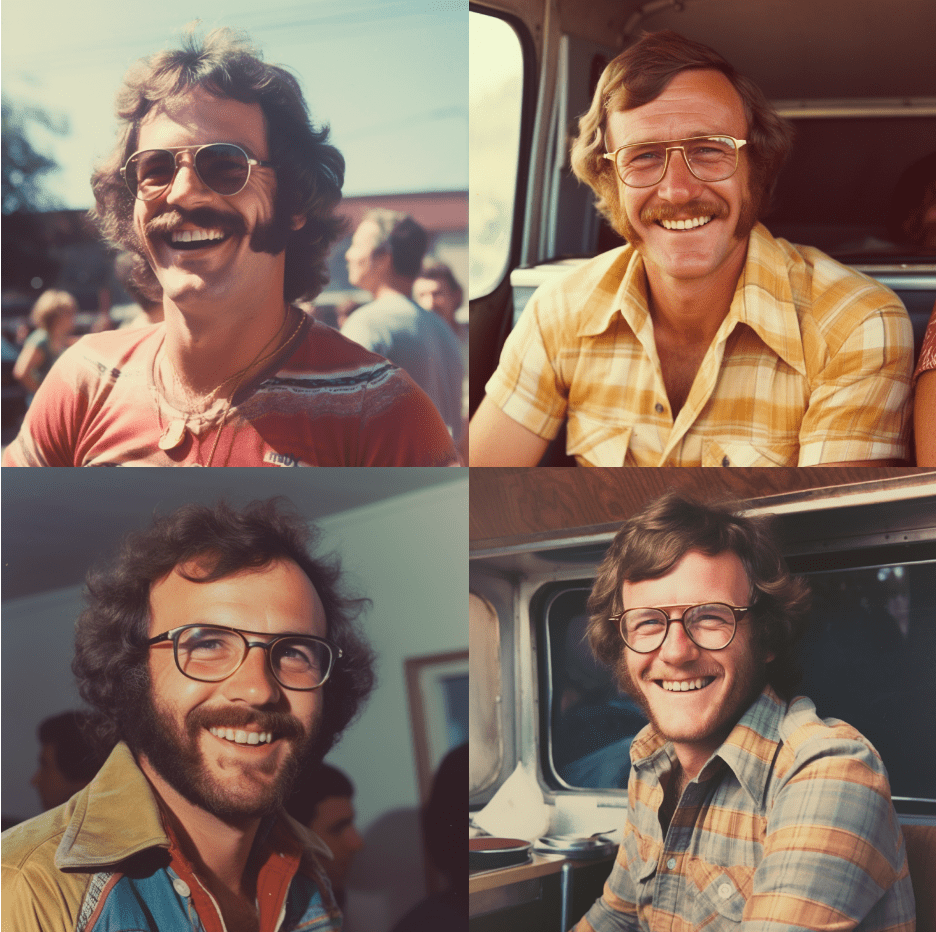

Prompt: grainy photo of a happy man in the 1970s. --q 2 --style raw

I almost always use --style raw to reduce the “Midjourney look.” This prompt gives you results that match it, but they can be a bit repetitive.

Let’s see how multi-prompting can make this better. To do this, simply separate each concept by a double colon (::). It will look like this:

Prompt: grainy photo:: happy:: man:: 1970s:: --q 2 --style raw

On its own, this won’t give you good results, because it forces Midjourney to weight each concept evenly (e.g., “happy” as much as “man”). You will likely get outputs that are somewhat nonsensical.

The real power comes when you start to weight each concept separately. The default weight is 1, and you can set each phrase’s weight anywhere between 0 and 2. I’ve found that weights below .25 aren’t very noticeable and weights above 1.5 are often overpowering, so I tend to spend most of my time between .25 and 1.

As an aside, a weight below 1 is not a negative prompt. Midjourney still adds that aspect to the output, just to a lesser degree. If you want your prompt to reduce something, make its weight negative. Let’s give our new approach a try.

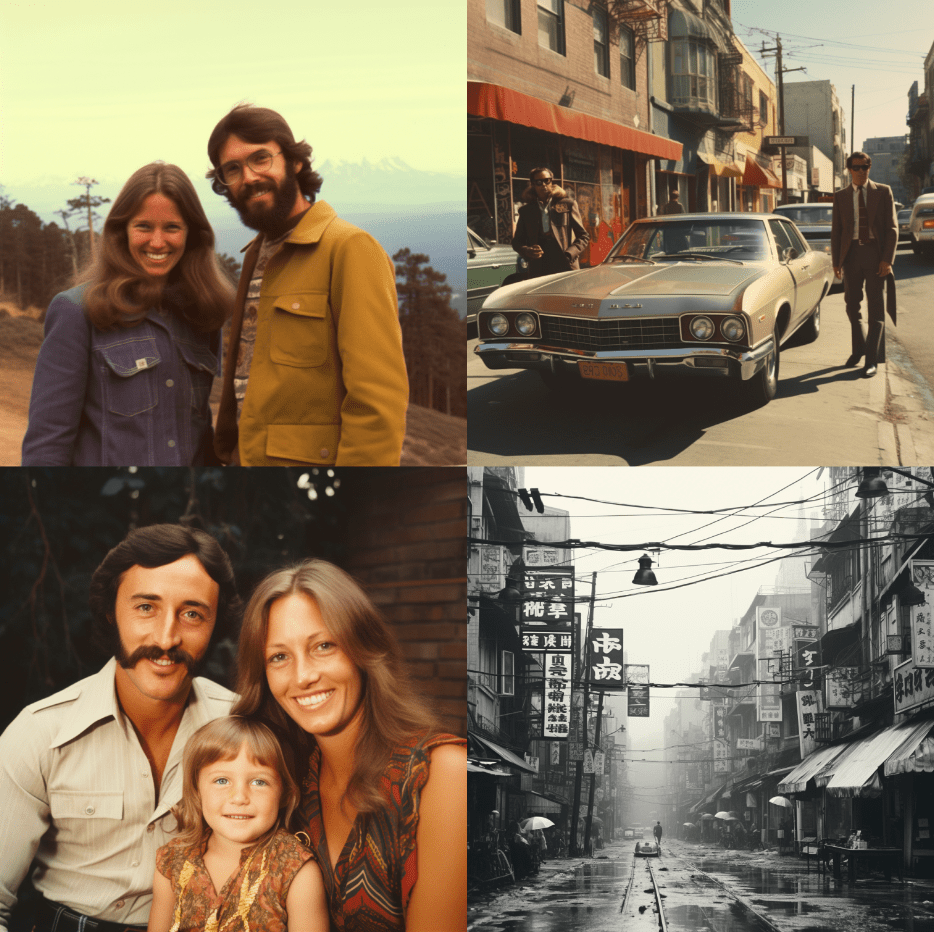

Prompt: grainy photo::1.5 happy::.25 man::1 1970s::1.5 --q 2 --style raw

Voilà! We get much more interesting results with substantially more variety. Some won’t be a perfect fit, but that’s an okay price to pay.

Why does this give us better, more interesting results? To oversimplify: rather than the model finding the nearest match in the latent space (and continually revisiting that exact spot, producing repetitive images), this approach looks for the intersection of these concepts at the relative weightings we’ve provided, seemingly putting them in tension with each other.
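The key word above is “relative.” Midjourney’s multi-prompt documentation describes weights as normalized, meaning only their ratios matter. The Python sketch below assumes that normalization can be modeled as dividing each weight by the total (a simplification for illustration, not a description of Midjourney’s actual internals) and shows how much of the prompt each concept accounts for. `relative_shares` is a hypothetical helper, not part of any Midjourney tool.

```python
def relative_shares(weights: dict[str, float]) -> dict[str, float]:
    """Return each concept's weight as a fraction of the total weight.

    Assumption for illustration only: normalization is modeled as dividing
    by the sum; this is not a description of Midjourney's actual internals.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must add up to a positive number")
    return {concept: w / total for concept, w in weights.items()}


# Weights from the dial prompt above.
dial = {"grainy photo": 1.5, "happy": 0.25, "man": 1.0, "1970s": 1.5}
for concept, share in relative_shares(dial).items():
    print(f"{concept}: {share:.0%}")

# Scaling every weight by the same factor leaves the balance unchanged.
doubled = {concept: 2 * w for concept, w in dial.items()}
print(relative_shares(doubled) == relative_shares(dial))
```

With these weights (total 4.25), “grainy photo” and “1970s” each account for roughly 35%, “man” for about 24%, and “happy” for about 6%, which is one rough way to see why a .25 weight acts as a light touch rather than an equal partner.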

You can combine this approach with other tools. Let’s look more closely at the negative prompting we mentioned earlier. Let’s say I get annoyed at the model producing all these 70s-era jackets. I could add the following to my prompt:

Prompt: grainy photo::1.5 happy::.25 man::1 1970s::1.5 jacket::-.25 --q 2

Now we get more images sans all those jackets!

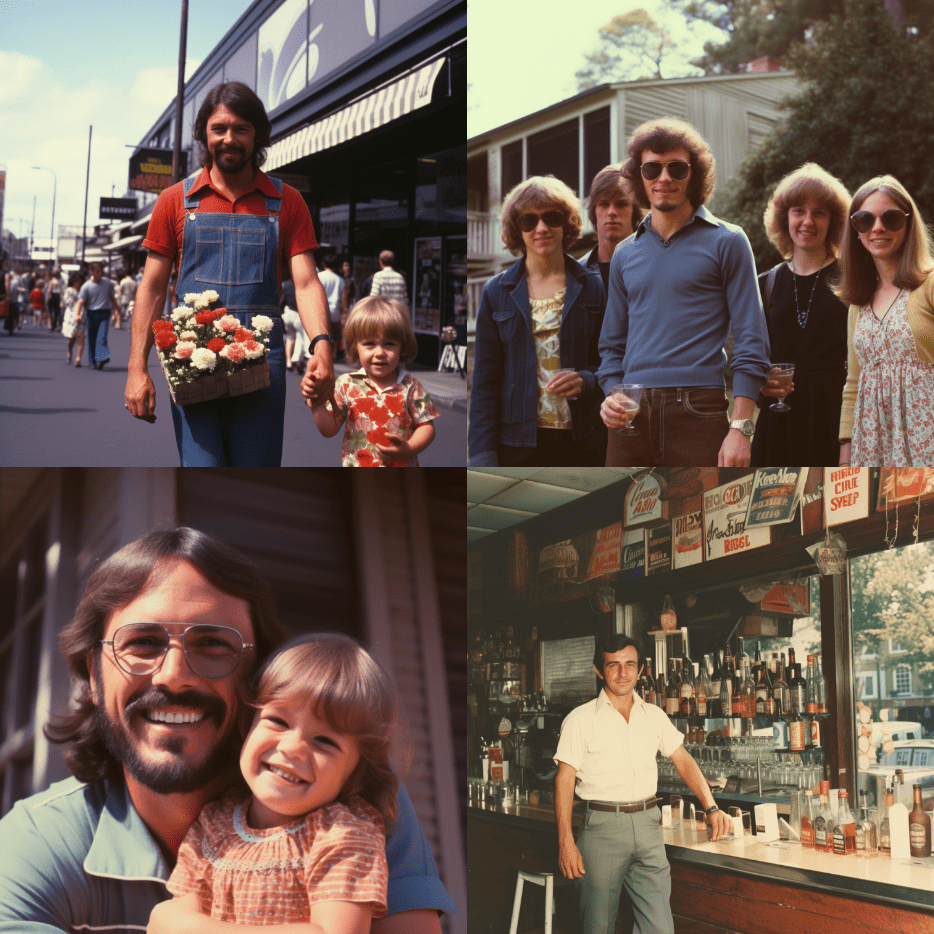
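If you iterate on dial prompts a lot, assembling the string by hand gets error-prone. The sketch below is a hypothetical Python helper (not a Midjourney feature or API) that joins a concept-to-weight mapping into the `concept::weight` syntax used above. It also rejects weight sets that don’t add up to a positive number, since Midjourney’s documentation states that the sum of all weights must be positive when negative weights are involved.

```python
def format_weight(weight: float) -> str:
    """Format a weight the way the prompts above write it (.25 rather than 0.25)."""
    text = f"{weight:g}"  # 1 -> "1", 0.25 -> "0.25", -0.25 -> "-0.25"
    if text.startswith("0."):
        return text[1:]
    if text.startswith("-0."):
        return "-" + text[2:]
    return text


def build_multi_prompt(weights: dict[str, float], params: str = "") -> str:
    """Join concept::weight pairs into one multi-prompt string.

    Hypothetical drafting helper: Midjourney only ever sees the final text.
    """
    if sum(weights.values()) <= 0:
        # Midjourney's docs say all weights together must sum to a positive number.
        raise ValueError("the sum of all weights must be positive")
    body = " ".join(f"{concept}::{format_weight(w)}" for concept, w in weights.items())
    return f"{body} {params}".strip()


jacket_free = {
    "grainy photo": 1.5,
    "happy": 0.25,
    "man": 1,
    "1970s": 1.5,
    "jacket": -0.25,  # negative weight pushes jackets out of the image
}
print(build_multi_prompt(jacket_free, "--q 2"))
# prints: grainy photo::1.5 happy::.25 man::1 1970s::1.5 jacket::-.25 --q 2
```

Dropping the “jacket” entry and passing "--q 2 --style raw" as the parameters reproduces the first weighted prompt instead.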

One of the best things about the dial prompt is that you can combine it with conditional prompting (which Midjourney’s documentation calls permutation prompts) to quickly discover new areas of the latent space. Conditional prompts let you generate multiple prompts in one go by putting comma-separated options in curly braces. For example:

Prompt: grainy photo::1.5 happy::{.25,.75} man::{1,1.25} 1970s::1.5 --q 2 --style raw

This creates four outputs, testing two different weights each for “happy” and “man.” It burns through your GPU time quickly, though, so be careful using it in Fast mode.
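Every combination of the options inside the curly braces becomes its own job, so it can help to preview the expansion before submitting. The Python sketch below is a hypothetical local helper (not part of Midjourney) that expands brace groups with a Cartesian product. It assumes flat, non-nested braces and ignores Midjourney’s escaping rules.

```python
import itertools
import re

# Matches one flat {option,option,...} group; nested braces are not supported.
BRACE_GROUP = re.compile(r"\{([^{}]*)\}")


def expand_permutations(prompt: str) -> list[str]:
    """Return every prompt produced by expanding the {a,b,...} groups in `prompt`."""
    # Options for each group, in the order the groups appear.
    groups = [[opt.strip() for opt in m.group(1).split(",")]
              for m in BRACE_GROUP.finditer(prompt)]
    # Literal text around the groups: always one more piece than there are groups.
    pieces = re.split(r"\{[^{}]*\}", prompt)

    expanded = []
    # itertools.product yields one tuple per combination (one empty tuple if no groups).
    for combo in itertools.product(*groups):
        text = pieces[0]
        for option, tail in zip(combo, pieces[1:]):
            text += option + tail
        expanded.append(text)
    return expanded


template = "grainy photo::1.5 happy::{.25,.75} man::{1,1.25} 1970s::1.5 --q 2 --style raw"
for variant in expand_permutations(template):
    print(variant)
```

Run against the example prompt, it prints the four variants described above, from `happy::.25 man::1` through `happy::.75 man::1.25`.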

There you have it — power prompting with dials. Start exploring the latent space with more control, and have fun.

Against the Grain: Jenni Pasanen

“Creating in the new era of art, where creativity and technology merge as one,” reads a pinned tweet on AI-collaborative artist Jenni Pasanen’s page. There could hardly be a more apt way to frame her stamp on the art world; the Finland-based creative’s work deals in the fantastical and the tangibly felt, able to transport even the most cynical viewer to a place of strange (and sometimes unnerving) everyday enchantment.

Pasanen’s background is in design and animation, and she has been experimenting with digital painting for the better part of the last two decades. She began teaching herself about the world of crypto and NFTs in 2020 and minted her first collection in March 2021.

“I was mesmerized by the endless possibilities [AI] could offer, and it immediately resonated with me,” Pasanen said in an interview with nft now this spring. “I knew I had finally found my home in art. From that moment on, I have been using AI as a part of my craft. It was the missing piece I had always been looking for.”

Few have blended the more traditional aspects of digital art with AI as well or as beautifully as Pasanen, who uses Photoshop and Artbreeder in a workflow she describes as a “layer cake” approach to creation. On July 18, Pasanen released Lope, a long-form generative art collection of 101 pieces that invite the viewer to consider the relationship between the rational and the emotional.

AI Candy

There is no shortage of incredible images and videos being created with AI these days, especially at the intersection of Midjourney and Runway Gen 2. Here are some visually compelling videos that caught our eye this week.

And we would be remiss not to mention the winners of Claire Silver’s fifth AI art contest, which focused on AI video and animation. Congratulations to opticnerd, Dreaming Tulpa, Gordey Prostov, Austin Wayne Spacy, and Boxio3.

‘Interference’

Fuzzy TV snippets mix with a radio confession to create an atmospheric, dreamlike experience. A homage to past analogue media as a young woman moves along the radio and TV dial. 📺📻☎️#ClaireAIContest

AI Tools:

GPT4 – sound design research

Zeroscope – video pic.twitter.com/oaw2wFzoZM

— opticnerd (@_opticnerd_) July 15, 2023

Here is my submission 🙏

As humans, we comprehend time as a straight line moving in only one direction: forward, incapable of pausing or reversing.

Every moment we’ve lived through, every choice we’ve made, and all the decisions we are yet to make, are forever etched onto this… pic.twitter.com/tXG9WtuFsZ

— Dreaming Tulpa 🥓👑 (@dreamingtulpa) July 11, 2023

“SWEETNESS OF THE NEW”

(sound on) Made with Stable Diffusion, Runway, and After Effects.

Danced & Edited by me.

Music by Gray.

A tribute to one of the greatest artists to live. pic.twitter.com/yZXvZR2LuJ

— austin wayne spacy (@austinspacy) July 14, 2023

Hi Claire, this is my #ClaireAIContest submission called

‘Lifeless Hues’ 🫶 All AI 🫶 pic.twitter.com/3oJTk9f3qG

— Boxio3 🌼 Live Drop on FND (@Boxio3) July 14, 2023