We Tried Fox’s Blockchain-Based Tool for Deepfake Detection. Here’s How It Went

Fox Corp. made ripples in media circles on Tuesday when it announced that it was launching “Verify,” a new blockchain-based tool for verifying the authenticity of digital media in the age of AI.

The project addresses a pair of increasingly nettlesome problems: AI is making it easier for “deepfake” content to spring up and mislead readers, and publishers are frequently finding that their content has been used to train AI models without permission.

A cynical take might be that this is all just a big public relations move. Stirring “AI” and “Blockchain” together into a buzzword stew to help build “trust in news” feels like great press fodder, especially if you’re an aging media conglomerate with credibility issues. We’ve all seen Succession, haven’t we?

But let’s set the irony aside for a moment and take Fox and its new tool seriously. On the deepfake end, Fox says people can load URLs and images into the Verify system to determine if they’re authentic, meaning a publisher has added them to the Verify database. On the licensing end, AI companies can use the Verify database to access (and pay for) content in a compliant way.

Blockchain Creative Labs, Fox’s in-house technology arm, partnered with Polygon, the low-fee, high-throughput blockchain that works atop the sprawling Ethereum network, to power things behind the scenes. Adding new content to Verify essentially means adding an entry to a database on the Polygon blockchain, where its metadata and other information are stored.

Unlike so many other crypto experiments, the blockchain tie-in might have a point this time around: Polygon gives content on Verify an immutable audit trail, and it ensures that third-party publishers don’t need to trust Fox to steward their data.
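
To make the “immutable audit trail” idea concrete, here is a toy hash-chained log in Python. It is only a sketch of the general tamper-evidence principle that blockchains build on, not Polygon’s or Verify’s actual data model; the record fields, the `news.example` URLs and the function names are all hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash an entry together with the hash of the entry before it."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(log: list, payload: dict) -> None:
    """Add a record that commits to everything already in the log."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev, "payload": payload, "hash": entry_hash(prev, payload)})


def is_intact(log: list) -> bool:
    """Recompute every link; any edited record breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != entry_hash(entry["prev"], entry["payload"]):
            return False
        prev = entry["hash"]
    return True


# Hypothetical records, for illustration only.
log = []
append(log, {"url": "https://news.example/story", "publisher": "example-publisher"})
append(log, {"url": "https://news.example/other", "publisher": "example-publisher"})
print(is_intact(log))

# Quietly rewriting an old record is detectable.
log[0]["payload"]["publisher"] = "someone-else"
print(is_intact(log))
```

On a public chain, many independent parties hold and check copies of the log, which is why publishers would not have to take Fox’s word for what was recorded and when.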

Verify in its current state feels a bit like a glorified database checker, a simple web app that uses Polygon’s tech to keep track of images and URLs. But that doesn’t mean it’s useless – particularly when it comes to helping legacy publishers navigate licensing deals in the world of large language models.

Verify for Consumers

We went ahead and uploaded some content into Verify’s web app to see how well it works in day-to-day use, and it didn’t take us long to notice the app’s limitations for the consumer use case.

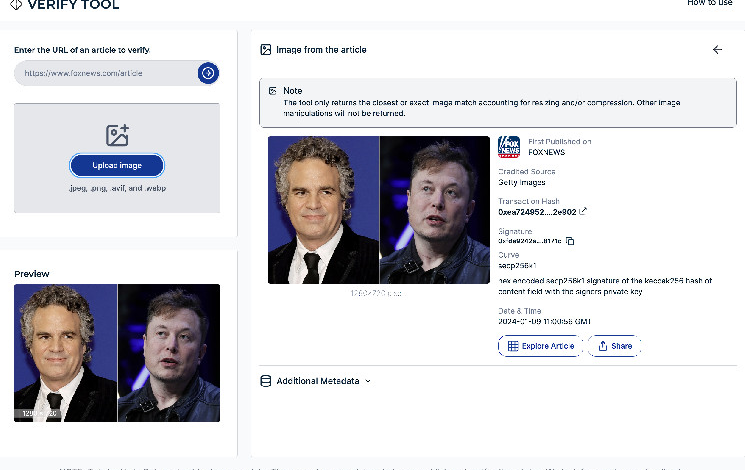

The Verify app has a text input box for URLs. When we pasted in a Fox News article from Tuesday about Elon Musk and deepfakes (which happened to be featured prominently on the site) and pressed “enter,” a bunch of information popped up attesting to the article’s provenance. Along with a transaction hash and signature – data for the Polygon blockchain transaction representing the piece of content – the Verify app also showed the article’s associated metadata, licensing information, and a set of images that appear in the content.

We then downloaded and re-uploaded one of those images into the tool to see if it could be verified. When we did, we were shown data similar to what we saw when we entered the URL. (When we tried another image, we could also click on a link to see other Fox articles that the image had been used in. Cool!)

While Verify accomplished these simple tasks as advertised, it’s hard to imagine many people will need to “verify” the source of content that they lifted directly from the Fox News website.

In its documentation, Verify suggests that a possible user of the service might be a person who comes across an article on social media and wants to figure out whether it is from a putative source. When we ran Verify through this real-world scenario, we ran into issues.

We found an official Fox News post on X (the platform formerly known as Twitter) featuring the same article that we verified originally, and we then pasted X’s version of the article’s URL into Verify. Even though clicking on the X link leads directly to the same Fox News page that we checked originally – and Verify was able to pull up a preview of the article – Verify wasn’t able to tell us if the article was authentic this time around.
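
Fox hasn’t said why the X link failed. One plausible explanation, and it is only a guess, is that the lookup compares the submitted URL string against registered URLs without first following the redirect or stripping tracking parameters. The Python sketch below shows how that kind of mismatch arises and how resolving and normalizing a link first would avoid it. The `short.example` and `news.example` addresses, the redirect table and the function names are all made up for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

TRACKING_PREFIXES = ("utm_",)  # assumed list of parameters to ignore


def normalize(url: str) -> str:
    """Lowercase scheme and host, drop tracking params, fragment and trailing slash."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query)
        if not key.startswith(TRACKING_PREFIXES)
    ]
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path.rstrip("/"), urlencode(query), "")
    )


def resolve(url: str, redirects: dict, max_hops: int = 5) -> str:
    """Follow a redirect table (a stand-in for real HTTP redirects)."""
    for _ in range(max_hops):
        if url not in redirects:
            break
        url = redirects[url]
    return url


# Hypothetical registry and social-media short link.
registered = {"https://news.example/story"}
redirects = {"https://short.example/abc": "https://news.example/story/?utm_source=social"}
shared_link = "https://short.example/abc"

print(shared_link in registered)                                  # naive string lookup
print(normalize(resolve(shared_link, redirects)) in registered)   # resolve, then normalize
```

A production version would follow live HTTP redirects and would need care around which query parameters are safe to drop, since some sites use them to select the article itself.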

We then screen-grabbed the thumbnail image from the Fox News post: one of the same Fox images that we uploaded last time around. This time, we were told that the image couldn’t be authenticated. It turns out that if an image is manipulated in any way – which includes slightly cropped thumbnails, or screenshots whose dimensions aren’t exactly right – the Verify app fails to authenticate it.
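
This behavior is what you would expect from a system that identifies files by an exact digest of their bytes, though Fox hasn’t confirmed that Verify works this way. The Python sketch below assumes exact SHA-256 matching purely for illustration; the `ExactMatchRegistry` class and the stand-in “image” bytes are hypothetical.

```python
import hashlib
from typing import Optional


def fingerprint(data: bytes) -> str:
    """Exact digest: changing even one byte yields an unrelated value."""
    return hashlib.sha256(data).hexdigest()


class ExactMatchRegistry:
    """Toy registry keyed by file digest (an assumption, not Verify's design)."""

    def __init__(self) -> None:
        self._sources = {}

    def register(self, data: bytes, source: str) -> None:
        self._sources[fingerprint(data)] = source

    def lookup(self, data: bytes) -> Optional[str]:
        return self._sources.get(fingerprint(data))


# Stand-in bytes for an image file; a real test would read actual files.
original = bytes(range(256)) * 4
cropped = original[:-16]  # simulates a crop or re-encode that changes the bytes

registry = ExactMatchRegistry()
registry.register(original, "example-publisher")

print(registry.lookup(original))  # the untouched file is found
print(registry.lookup(cropped))   # the altered file is not
```

Tolerating crops, resizes and screenshots generally calls for a different technique, such as perceptual hashing, which trades exactness for similarity matching and brings its own risk of false positives.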

Some of these technical shortcomings will surely be ironed out, but there are even more complicated engineering problems that Fox will need to contend with if it hopes to help consumers suss out AI-generated content.

Even when Verify is working as advertised, it can’t tell you whether the content was AI-generated – only that it came from Fox (or from whatever other source uploaded it, presuming other publishers use Verify in the future). If the goal is to help consumers discern AI-generated content from human content, this doesn’t help. Even trusted news outlets like Sports Illustrated have become embroiled in controversy for using AI-generated content.

Then there’s the problem of user apathy. People tend not to care so much about whether what they’re reading is true, as Fox is surely aware. This is especially true when people want something to be true.

For something like Verify to be useful for consumers, one imagines it’ll need to be built directly into the tools that people use to view content, like web browsers and social media platforms. You could imagine a sort of badge, à la community notes, that shows up on content that’s been added to the Verify database.

Verify for Publishers

It feels unfair to rag on this barebones version of Verify given that Fox was quite proactive in labeling it as beta. Fox also isn’t only focused on general media consumers, as we have been in our testing.

Fox’s partner, Polygon, said in a press release shared with CoinDesk that “Verify establishes a technical bridge between media companies and AI platforms” and has additional features to help create “new commercial opportunities for content owners by utilizing smart contracts to set programmatic conditions for access to content.”

While the specifics here are somewhat vague, the idea seems to be that Verify will serve as a sort of global database for AI platforms that scrape the web for news content – providing a way for AI platforms to glean authenticity and for publishers to gate their content behind licensing restrictions and paywalls.

Verify would probably need buy-in from a critical mass of publishers and AI companies for this sort of thing to work; for now, the database just includes around 90,000 articles from Fox-owned publishers including Fox News and Fox Sports. The company says it has opened the door for other publishers to add content to the Verify database, and it has open-sourced its code for those who want to create new platforms based on its tech.

Even in its current state, the licensing use case for Verify seems like a solid idea – particularly in light of the thorny legal questions that publishers and AI companies are currently reckoning with.

In a recently filed lawsuit against OpenAI and Microsoft, the New York Times has alleged its content was used without permission to train AI models. Verify could provide a standard framework for AI companies to access online content, thereby giving news publishers something of an upper hand in their negotiations with AI companies.